Clustering Images with Autoencoders and Attention Maps

(Note: You can find the full notebook for this project here, or you can just scroll down to see the cool images it makes.)

I recently approached a new project where I wanted to create a model that sorted images into similar, automatically-generated groups. I hadn’t done an unsupervised clustering project with neural networks before, so this idea was intriguing to me. I looked through the Keras documentation for a clustering option, thinking this might be an easy task with a built-in method, but I didn’t find anything. I knew I wanted to use a convolutional neural network for the image work, but it looked like I would have to figure out how to feed that output into a clustering algorithm elsewhere (spoiler: it’s just scikit-learn’s K-Means).

Why not just feed the images into KMeans directly? Well, it could work, but the number of features would be out of control for my processing abilities, even after renting a GPU. My dataset was 200,000 images of faces from IMDB and Wikipedia, centered and pre-cropped around each face. Even with the nicely formatted data, the preprocessing was fairly extensive, and eventually included resizing all the images to 150×150 pixels. With three channels (RGB), that means (150x150x3) = 67,500 features and 200,000 examples. That’s a lot of information, and a lot more than we need to cluster effectively.

The solution I found was to build an autoencoder, grab an attention map (basically just the compressed image) from the intermediate layers, then feed that lower-dimension array into KMeans. Okay, so what does that mean?

Autoencoder

The basic idea behind a CNN autoencoder is that you take the training data, feed it through alternating convolutional and pooling layers until you get to the desired compression, then feed it through convolutional and upsampling layers until you get back to the original size. You set the output to be the same as the input, so the model is learning to compress and unpack images to get them to be as close to the original as possible. My model looked like this:

CONV → MaxPooling → CONV → MaxPooling → CONV → MaxPooling → CONV → UpSampling → CONV → UpSampling → CONV → UpSampling → CONV

I made sure the padding and pool shapes lined up so the output was the same dimension as the input, then trained it on X_input = X_output. Voila! A lot of information is lost in the process, but luckily we’re not using this solely as an image compression technique.

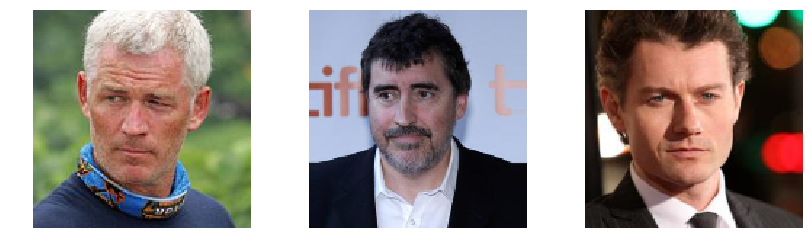

Before encoding:

After decoding:

After decoding: The idea here is that the autoencoder is capturing the essence these images. Ideally it is keeping only the most important features. And it should be noted that nothing about this model is trained on finding the faces — this clustering works because the images all have a similar formatting. It could be modified to work on a model that is specifically trained on finding face components, though.

The idea here is that the autoencoder is capturing the essence these images. Ideally it is keeping only the most important features. And it should be noted that nothing about this model is trained on finding the faces — this clustering works because the images all have a similar formatting. It could be modified to work on a model that is specifically trained on finding face components, though.

So I have a model that both encodes and decodes images. I want the compressed image, so I have to grab the intermediate output, i.e., the output from the last pooling layer before decoding begins. This turned out to be easier than I thought, and takes just one line of code:

get_encoded = K.function([auto_model.layers[0].input], [auto_model.layers[5].output])

But don’t worry about the code. All this does is run the model from layer 0 to layer 5 — the encoding portion. The output of this compression process is an array of shape (19, 19, 8). So we’ve gone from 67,500 features to (19x19x8) = 2,888. Much better. We could have started with larger images and compressed more before grabbing the output. Can we visualize this compressed array to see what we’ve got?

Attention Map

One of the main criticisms of neural networks is that they are very black-boxy. It’s hard to figure out why they do what they do. With image data, however, we have a convenient way of seeing which values the convolutional layers pass on to the final layers of a model. For our autoencoder, these internal layers are actually the whole point of the model. So let’s run the model just through the encoding layers (or for a supervised model, you could run just as far as the final convolutional layer).

Now the problem is that the output has too many channels to visualize properly (remember, our array is 19x19x8). We can just pool once more over the final dimension (like, encoded_array.max(axis=-1)) to get an array that is (19x19x1). This should tell us which pixels are important, although we do still lose the information as to why they are important (that is contained in the dimension we just pooled over). Here’s what it looks like:

Cool. Then model is mostly interested in bright spots and solid colors and celebrities. Now let’s actually do something with those compressed images.

Cool. Then model is mostly interested in bright spots and solid colors and celebrities. Now let’s actually do something with those compressed images.

Clustering

Here we go! K-Means seems like the most straight-forward model for the task, and I found that 25 clusters gave enough variety without making the clusters overly broad. This part of the project is as simple as plugging the encoded array, shaped (200,000, 19, 19, 8), into scikit-learn and grabbing the labels as the output.

Here are images from a few of the clusters it created:

Cluster 1:

Cluster 5:

Cluster 5:  Cluster 12:

Cluster 12:  Cluster 19:

Cluster 19:  Cluster 24:

Cluster 24:  Okay, this is pretty cool, but with reservations. While some elements are obvious (Cluster 24 is low-lit, Cluster 19 has white backgrounds, Cluster 12 is more gray/neutral), it’s hard to tell what the defining features are for each cluster from just a few images. Remember, each cluster has 4,000 – 15,000 images. This is way too many to look through by hand (by eye?). We could average all the images themselves, but I think it would be better to average the encoded versions of all the images so we’re looking at the same thing the clustering algorithm was looking at.

Okay, this is pretty cool, but with reservations. While some elements are obvious (Cluster 24 is low-lit, Cluster 19 has white backgrounds, Cluster 12 is more gray/neutral), it’s hard to tell what the defining features are for each cluster from just a few images. Remember, each cluster has 4,000 – 15,000 images. This is way too many to look through by hand (by eye?). We could average all the images themselves, but I think it would be better to average the encoded versions of all the images so we’re looking at the same thing the clustering algorithm was looking at.

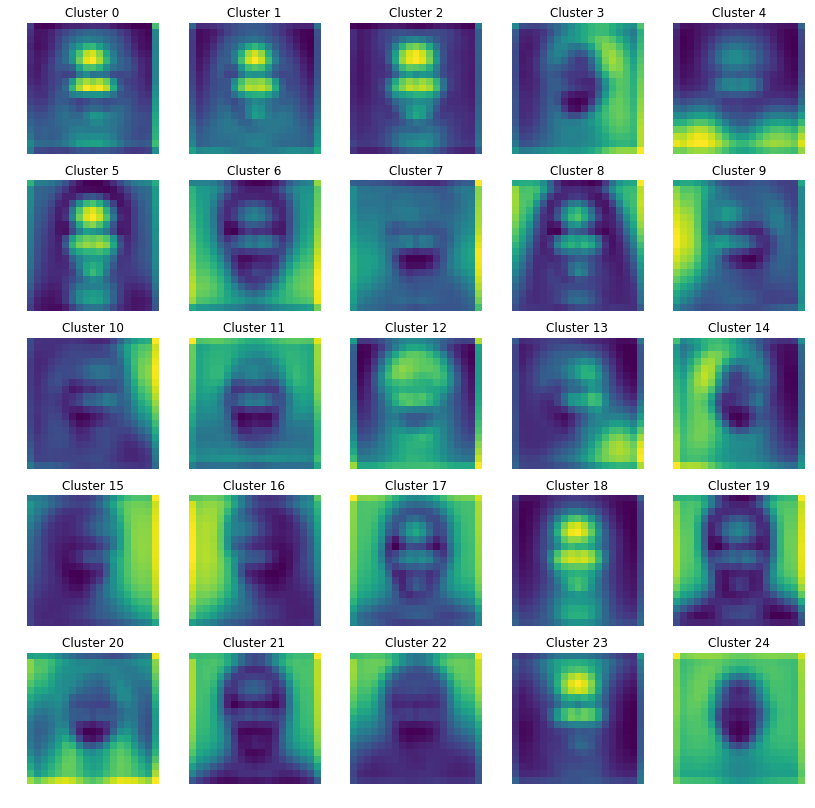

Again, it’s just one line of code, this time taking the mean over the first dimension (axis=0). Here’s what the average encoded image looks like for each cluster:

Alright! Now we’re getting somewhere. These clusters are clearly distinct from one another, so something is going right. The last thing I want to do is see what these averages look like as full RBG images. How we can make an encoded image into a full size image? Decode it! So we’ll just run these averaged images through the second half of our autoencoder model to bring them back to life. Here we go!

Alright! Now we’re getting somewhere. These clusters are clearly distinct from one another, so something is going right. The last thing I want to do is see what these averages look like as full RBG images. How we can make an encoded image into a full size image? Decode it! So we’ll just run these averaged images through the second half of our autoencoder model to bring them back to life. Here we go!

And immediately I realize I’ve created something creepy. Really cool, but creepy. This makes it a lot clearer what each cluster is looking at. For this dataset and how I’ve built the autoencoder, it mostly focuses on lighting and shape, but it’s pretty obvious that this could be used to profile by skin color or other physical feature. Of course, it could also be used to separate unlabeled, non-face images into groups. Given the amount of unlabeled data in the world (which is a big deal in the autonomous vehicle world), this could be a really useful technique.

And immediately I realize I’ve created something creepy. Really cool, but creepy. This makes it a lot clearer what each cluster is looking at. For this dataset and how I’ve built the autoencoder, it mostly focuses on lighting and shape, but it’s pretty obvious that this could be used to profile by skin color or other physical feature. Of course, it could also be used to separate unlabeled, non-face images into groups. Given the amount of unlabeled data in the world (which is a big deal in the autonomous vehicle world), this could be a really useful technique.

This is also a good reminder of how powerful these techniques are. I was able to build this model on my own, in a couple weeks, using only publicly-available data. The sophistication of similar techniques at Google or Facebook must be staggering.

Thanks for reading! Feel free to leave feedback or comments or links to a project you’ve done like this one. Happy clustering!

This is an excellent use of a convolutional neural network for image recognition, with the architecture clearly mapped out. Great work Max!